August 4, 2017 - 2 min read

Kaggle competition: pseudo-labelling efficiency in regression tasks

I needed some practice on regression problems for a project. Luckily, the Kaggle Mercedes-Benz competition, which aims to develop a model that predicts how long a car stays on the test bench during manufacturing, had just started.

I am starting to get a much better grasp of the individual models and how they work. To learn new approaches and tricks, I tried several published kernels. One of them, from Hakeem, showed me a new way to stack models and to go beyond the basic recipe:

- Feature engineering

- Run and optimize xgboost.

The next step was to try to improve performance. I tried quite a few things (deep learning based models, many different combinations of features, hyperparameter optimization, …), drawing on the fair number of courses and tutorials I have followed by now. Unfortunately, I don’t have the time to report them all, but one attempt gave me a surprisingly good result on the private leaderboard: pseudo-labelling, which I learned about in the excellent fast.ai courses. Here is the code that led to my best position:

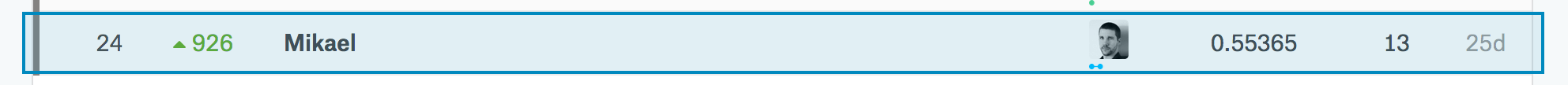
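For readers who cannot see the image, here is a minimal sketch of the general pseudo-labelling idea. It is not the exact code from the screenshot: it assumes scikit-learn and NumPy, uses synthetic data in place of the competition data, and swaps the stacked model for a plain random forest. The names `pseudo_label_fit` and `make_rf` are hypothetical and only there for illustration.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor


def pseudo_label_fit(make_model, X_train, y_train, X_test):
    """Fit on labelled data, pseudo-label X_test, then refit on both."""
    # Step 1: fit a first model on the labelled training rows only.
    base = make_model().fit(X_train, y_train)
    # Step 2: predict the unlabelled test rows; these are the pseudo-labels.
    pseudo_y = base.predict(X_test)
    # Step 3: refit a fresh model on real and pseudo-labelled rows together.
    X_aug = np.vstack([X_train, X_test])
    y_aug = np.concatenate([y_train, pseudo_y])
    final = make_model().fit(X_aug, y_aug)
    return final, pseudo_y


# Synthetic stand-in data (assumption: NOT the competition data).
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 5))
y = 100 + 10 * X[:, 0] + rng.normal(scale=1.0, size=300)
X_train, y_train, X_test = X[:200], y[:200], X[200:]


def make_rf():
    # Hypothetical model factory; any regressor with fit/predict would do.
    return RandomForestRegressor(n_estimators=50, random_state=0)


model, pseudo_y = pseudo_label_fit(make_rf, X_train, y_train, X_test)
predictions = model.predict(X_test)
```

In practice the pseudo-labelled rows are often subsampled or down-weighted so that the model's own mistakes do not dominate the second fit; this sketch keeps them all for simplicity.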

As you can see in the following screenshot, I ended up 24th out of 3835 participants, a massive jump of 926 ranks. It should be noted that many participants were using leaderboard probing to find out which data points were used for the public leaderboard. As a result, many had models that overfit the public leaderboard and ranked well there, but dropped massively once confronted with the private leaderboard. I decided that this was not that interesting and chose to trust my cross-validation results, having already learned a nice way to construct stacked models.

Why did pseudo-labelling help so much? Pseudo-labelling is mostly used in classification problems, where it helps to better separate classes. It is not as directly applicable to a regression problem. Apart from providing more data points (which is not negligible), one part of the model is a random forest. When used for regression, random forests basically construct trees that “classify” samples into small bins of target values. So pseudo-labelling could, in this case, have helped to better separate these bins.
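The “bins” intuition can be checked on a single regression tree. The sketch below assumes scikit-learn and a synthetic one-dimensional dataset (not the competition data). A tree limited to 8 leaves can only ever output at most 8 distinct values, one per leaf, each being the mean target of the training rows that fall in that leaf. A random forest averages many such trees, so its output is finer-grained but still piecewise constant.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Synthetic data (assumption: for illustration only).
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(500, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=500)

# A small tree: every leaf acts as one "bin" of target values.
tree = DecisionTreeRegressor(max_leaf_nodes=8, random_state=0).fit(X, y)

# Predict on many new points and count how many distinct outputs appear.
X_new = rng.uniform(0, 10, size=(1000, 1))
preds = tree.predict(X_new)
distinct_values = np.unique(preds)
n_leaves = tree.get_n_leaves()

print(f"{len(distinct_values)} distinct predictions from {n_leaves} leaves")
```

Whether pseudo-labels really sharpen the boundaries between these bins is only a hypothesis here; the example just shows that the bins exist.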