February 15, 2021 - 3 min read

How to test a data science model's acceptability?

Putting a model in production is not only about how many requests per second it can handle

As a product/digital/business manager, you were diligent and you set up a data science project that follows a carefully established strategy (who said 5 D’s?). But now, you have a thrilled data scientist or machine learning engineer explaining that their model reaches an impressive accuracy under a given validation strategy and that you should start using it in production. Can it be that simple?

Evaluating a machine learning model is standard practice, as it is the only way to actually show that the model is doing well. Roughly, it means that you set aside some part of the data to assess how well the model is doing according to some predefined metric (quite often the winner is accuracy). I will not go into the details here, but I like Jeremy Jordan’s post or Maarten Grootendorst’s writing on validation strategy, if you want to go deeper.

Now, imagine your tech team spent a fair amount of energy developing a credit risk model, and the evaluation shows that everything looks green! Taking a quick shortcut: it runs in production, and bank advisors start using the nice user interface that was designed for it. Just by filling in a simple form, they get an assessment of the risk the bank is taking if the loan is approved. After a few months, they start to notice that they can game the model a little, but they only do so for the clients they like. You certainly see where this is going. This is a recipe for catastrophe. What was missing?

Putting a model into production should also be about making sure it never predicts something that the business cannot handle; in other words, about increasing the model’s acceptability. Uncertainty and sensitivity analysis can help you with that. It’s not only me: in a recent Nature comment (i.e. a short article), leading scientists in the field recommend such analyses in order to build models that are useful to society:

“Mind the assumptions. Assess uncertainty and sensitivity.”

So, how does it work?

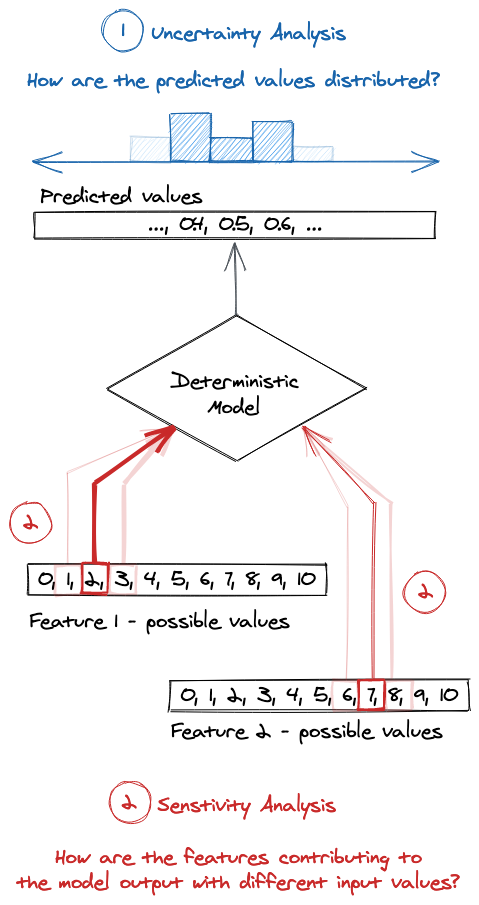

With uncertainty analysis (see next figure, part 1) you assess the model’s output when you change some of its knobs or its inputs. You try to get a general idea of how uncertain, or how spread out, the model’s output is. A model is as much about the data that is used for the task as it is about tuning and adjusting it. Some parameters of the model can be uncertain and allowed to vary. The inputs of a model can also face huge shifts. These are the effects you try to measure.
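To make this more concrete, here is a minimal sketch of an uncertainty analysis using only the Python standard library. Everything in it is an assumption made for illustration: `risk_score` is a toy stand-in for a trained credit risk model, and the applicant figures, the 10% noise level and the range of the `debt_weight` knob are invented. The idea is simply to draw many plausible inputs and parameter values, run the model on each, and look at how spread out the output is.

```python
import math
import random
import statistics


def risk_score(income, debt, savings, debt_weight=3.0):
    """Toy stand-in for a trained credit risk model (hypothetical)."""
    # Debt pushes the risk up; savings pull it down, both relative to income.
    z = debt_weight * debt / income - savings / income
    return 1.0 / (1.0 + math.exp(-z))  # squash to a score between 0 and 1


def uncertainty_analysis(n_draws=5000, seed=0):
    """Propagate input and parameter uncertainty through the model."""
    rng = random.Random(seed)
    scores = []
    for _ in range(n_draws):
        # Uncertain inputs: assume declared figures are off by about 10%.
        income = 40_000 * rng.gauss(1.0, 0.10)
        debt = 15_000 * rng.gauss(1.0, 0.10)
        savings = 5_000 * rng.gauss(1.0, 0.10)
        # Uncertain knob: assume the debt weight is only known within a range.
        debt_weight = rng.uniform(2.5, 3.5)
        scores.append(risk_score(income, debt, savings, debt_weight))
    scores.sort()
    return {
        "mean": statistics.fmean(scores),
        "stdev": statistics.stdev(scores),
        "p05": scores[int(0.05 * n_draws)],  # empirical 5th percentile
        "p95": scores[int(0.95 * n_draws)],  # empirical 95th percentile
    }


if __name__ == "__main__":
    for name, value in uncertainty_analysis().items():
        print(f"{name}: {value:.3f}")
```

A wide gap between the 5th and 95th percentiles would suggest that small, realistic variations are enough to move the score a lot, which is worth knowing before an advisor relies on a single number.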

In sensitivity analysis (see next figure, part 2), you measure how the model’s output responds to a given input, and so assess the contribution of that input. Some might argue this is model testing, but it is not. In many testing strategies that come from engineering practices, you verify that the model, like any software, has an expected behaviour: when given undefined, null or set values, it replies as you would expect. With sensitivity analysis, you take into account a range of input values that is as wide and complete as possible, test the output when they are combined, and assess potential combinatory effects.

In the credit risk example, does it output anything that would be harmful? Would it, under some unexpected conditions, predict that you could lend millions to someone who has a history of unpaid debts but thousands in savings?
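The question above can be turned into a small sweep over combined input values. The sketch below is a simplified illustration, not a full sensitivity analysis method: `max_loan` is a hypothetical toy model with a blind spot planted on purpose, the grid values and the 100,000 cap are invented, and the "main effect" is a crude summary (the spread of the average output across the levels of one input) rather than a proper sensitivity index.

```python
import itertools
import statistics


def max_loan(income, savings, unpaid_debts):
    """Toy model (hypothetical): largest loan the model would suggest."""
    base = 3 * income + 20 * savings
    # Planted blind spot: unpaid debts only halve the amount.
    return base * (0.5 if unpaid_debts else 1.0)


def sweep(grid):
    """Evaluate the model on every combination of the grid values."""
    names = list(grid)
    rows = []
    for values in itertools.product(*grid.values()):
        inputs = dict(zip(names, values))
        rows.append((inputs, max_loan(**inputs)))
    return rows


def violations(rows, cap=100_000):
    """Business rule (assumed): with unpaid debts, never suggest more than cap."""
    return [(inputs, out) for inputs, out in rows
            if inputs["unpaid_debts"] and out > cap]


def main_effects(rows, grid):
    """Crude contribution of each input: spread of the mean output per level."""
    effects = {}
    for name, levels in grid.items():
        means = [
            statistics.fmean(out for inputs, out in rows if inputs[name] == level)
            for level in levels
        ]
        effects[name] = max(means) - min(means)
    return effects


GRID = {
    "income": [20_000, 60_000, 200_000],
    "savings": [0, 5_000, 50_000],
    "unpaid_debts": [False, True],
}

if __name__ == "__main__":
    rows = sweep(GRID)
    for inputs, out in violations(rows):
        print(f"rule broken: {inputs} -> {out:,.0f}")
    for name, effect in main_effects(rows, GRID).items():
        print(f"{name}: {effect:,.0f}")
```

Unlike a unit test that checks one expected answer, the sweep looks at the whole grid at once, so it can surface combinations nobody thought to write a test for. For real models, a dedicated library and a proper sampling scheme would be a better fit than a hand-written grid.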

As data science makes progress, many practices are settling down. Among them, data scientists are expected to be much better equipped with good engineering practices in order to ease the workload that is required before putting anything into production. Scribbling in notebooks is not good enough. While this is good, articles still regularly show up sharing stories about models’ blind spots and ethically dubious behaviors. Making more extensive use of tools such as sensitivity analysis can drastically help to limit them. Next time you plan and scope a data science project, would you be willing to include some extra time to make sure that the model behaves as it should, making the model more acceptable?

If you have ideas / examples of tools that help to make better models, please share them with a comment below 👇

Resources

- Sensitivity analysis for risk-related decision-making - Slides by E. Marsden.

- Why so many published sensitivity analyses are false: A systematic review of sensitivity analysis practices by A. Saltelli.

- Book on sensitivity analysis by A. Saltelli.

Libraries for Python aficionados:

I mostly develop in Python; the next three libraries are useful for working on the explainability of your models: